In the previous post I covered the ideas behind tidbit. In this post I will try to cover the technical aspects of the tidbit system. The work is currently very exploratory, so everything may change.

Tidbit record structure

This is a typical tidbit which was generated using the Rhythmbox plugin:

TIDBIT/0.1; libtidbit/0.1; Rhythmbox Tidbit Plugin v0.1

tidbit_userkey==usePzEg4Cl4g1ASdzpssVHtQ1hJJilS+ryiBWjF...

tidbit_table==audio/track

tidbit_created:=1281479640

tidbit_expires:=1313037240

artist==Arcade Fire

title==Keep The Car Running

album:=Indie/Rock Playlist: May (2007)

genre:=Indie

year:=2007

play_count:=34

rating:=0.8

tidbit_signed:=JyJ1fIwhRL5t3y9CACmshm/UibYVhvInxh7XVx4...

The first line is the header. It states the version of the tidbit followed by the user agent. The rest of the record is composed of key-value pairs. Keys have a strict format of lower-case letters and underscores. A value can contain any character above 0x1F and is terminated by a new line; other characters must be escaped.

The first four pairs are compulsory, and they all start with “tidbit_” to distinguish them from normal data. The userkey is a unique(ish) 1024-bit RSA key which identifies the user and also serves as the public portion of their signing key. It is base64 encoded; in the text above it is clipped, but in reality it is over 100 characters long. The table is a compulsory field which designates the subject matter. The created and expires values state when the record was created (which must be in the past) and when it will expire. Expired records are no longer valid. These values currently use Unix time, but a more general format will be used in the future.

The compulsory pairs are followed by a number of values specific to the record type. Finally, the record is completed by a signature (also base64 encoded). It is generated with the user's key, which signs a SHA512 hash of the record up to that line. There is a hard limit of 2KB per record to prevent abuse.
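To make the format concrete, here is a minimal Python sketch of a parser for the record layout described above. It is not libtidbit code: the function names are made up for illustration, escaping is ignored, and several details are assumptions (2KB is taken to mean 2048 bytes, blank lines are skipped, and the signed body is assumed to be every byte before the `tidbit_signed` line). The RSA step itself is left out; only the SHA512 digest is computed.

```python
import hashlib
import re

MAX_RECORD_BYTES = 2048  # assumption: "2KB" means 2048 bytes
# key: lower-case letters and underscore; value: no characters at or below 0x1F
PAIR_RE = re.compile(r"([a-z_]+)(==|:=)([^\x00-\x1f]*)")
COMPULSORY = ["tidbit_userkey", "tidbit_table", "tidbit_created", "tidbit_expires"]


def parse_record(text):
    """Return (version, user_agent, pairs) for a tidbit given as a string.

    pairs is a list of (key, separator, value) tuples in record order.
    """
    if len(text.encode("utf-8")) > MAX_RECORD_BYTES:
        raise ValueError("record is larger than 2KB")
    lines = [line for line in text.split("\n") if line]  # assumption: skip blanks
    # The header is the version followed by the user agent.
    version, _, user_agent = lines[0].partition("; ")
    pairs = []
    for line in lines[1:]:
        match = PAIR_RE.fullmatch(line)
        if match is None:
            raise ValueError("malformed pair: %r" % line)
        pairs.append(match.groups())
    keys = [key for key, _, _ in pairs]
    missing = [name for name in COMPULSORY if name not in keys]
    if missing:
        raise ValueError("missing compulsory pairs: %s" % ", ".join(missing))
    return version, user_agent, pairs


def signed_digest(text):
    """SHA512 hex digest of the record up to the tidbit_signed line.

    Assumption: the signed body ends with the newline before that line.
    """
    body, found, _ = text.partition("\ntidbit_signed:=")
    if not found:
        raise ValueError("record has no signature line")
    return hashlib.sha512((body + "\n").encode("utf-8")).hexdigest()
```

A real implementation would then verify the base64-decoded signature against this digest using the RSA key from `tidbit_userkey`.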

The separator between the key and the value is either ‘==’ or ‘:=’. It signifies whether the pair is used for matching (‘==’) or is simply a value to be overwritten (‘:=’). When inserting a new record, a search is performed for any records which match all the key/value pairs with the ‘==’ separator. These records are discarded, as they are overwritten by the new record. To ensure the correct sequence in cases where an old record is re-inserted into the database, the created date is checked. This allows a record to be updated by destroying an older version.
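The overwrite rule can be sketched in a few lines of Python. This is only an illustration of the behaviour described above, not how libtidbit stores anything: records are plain lists of (key, separator, value) tuples, the store is a list, and the helper names are hypothetical. It also assumes that an old record "matches" when its own ‘==’ pairs include every ‘==’ pair of the new record.

```python
def key_pairs(record):
    """The set of (key, value) pairs that use the '==' separator."""
    return {(key, value) for key, sep, value in record if sep == "=="}


def created(record):
    """The tidbit_created timestamp of a record, as an integer."""
    return int(next(value for key, sep, value in record if key == "tidbit_created"))


def insert(store, new):
    """Return a new store list with `new` inserted.

    Older records matching all of new's '==' pairs are discarded. If a
    matching record is newer than `new`, the store is returned unchanged,
    so re-inserting an old record cannot destroy its replacement.
    """
    matches = [old for old in store if key_pairs(new) <= key_pairs(old)]
    if any(created(old) > created(new) for old in matches):
        return store
    return [old for old in store if old not in matches] + [new]
```

With this rule, changing a rating means inserting a record with the same ‘==’ pairs, a later created time and a different `rating:=` value.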

Library

A library (libtidbit) handles most of the complexity of creating tidbits, key handling, communicating with databases and performing queries. Keys are stored in a gnome-keyring. There are also Python bindings which make creating plugins simple. Here is a partial mock-up of an example use in Rhythmbox:

In this plugin, forming tidbits and passing them out is very easy. Presenting the data is the hard part.

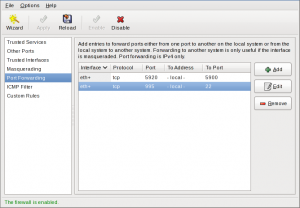

Databases

There are several database backends used in tidbit:

- Memory database is used to cache recently accessed records.

- Fork database is not a real database but rather a connection to two others, which fetches records from the local database where possible to minimise long distance transactions.

- D-Bus database is a service which allows several applications to share a single cache, and minimise external accesses.

- HTTP database is the method used for long distance transactions with the global servers.

- Sqlite database allows cached records to be saved between sessions.

The default database supplied for libtidbit access is a caching fork of a memory database and a D-Bus connection. The D-Bus service wakes up automatically to connect the applications to the global servers.
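The idea of a caching fork can be sketched as follows. This is a rough Python illustration with hypothetical class names, not the libtidbit API; it assumes that a fetch tries the local database first and copies anything found remotely into the local cache, and it leaves out insert and query forwarding entirely.

```python
class MemoryDatabase:
    """Keeps records in a dictionary keyed by GUID."""

    def __init__(self):
        self.records = {}

    def insert(self, guid, record):
        self.records[guid] = record

    def fetch(self, guid):
        # Returns None when the GUID is unknown.
        return self.records.get(guid)


class ForkDatabase:
    """Joins a fast local database to a slower remote one."""

    def __init__(self, local, remote):
        self.local = local
        self.remote = remote

    def fetch(self, guid):
        record = self.local.fetch(guid)
        if record is None:
            # Only go the long distance when the local cache misses.
            record = self.remote.fetch(guid)
            if record is not None:
                self.local.insert(guid, record)
        return record
```

In the default setup described above, the local side would be the memory database and the remote side the D-Bus connection.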

There are just three database commands at the moment:

- Insert to push new tidbits into the system

- Query to ask for tidbit GUIDs which match a query

- Fetch to get the full record from a GUID

The GUID is actually the signature and is unique(ish) to each record.
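As a rough picture of how the three commands fit together, here is a toy in-memory database in Python. The class and its methods are hypothetical and much simpler than the real thing: records are plain dictionaries, and since the query language is not described here, a query is assumed to be a set of exact key/value matches.

```python
class TinyDatabase:
    """Toy database supporting insert, query and fetch."""

    def __init__(self):
        self.by_guid = {}

    def insert(self, record):
        # The signature doubles as the record's GUID.
        guid = record["tidbit_signed"]
        self.by_guid[guid] = record
        return guid

    def query(self, **criteria):
        """Return the GUIDs of records matching every given key/value."""
        return [
            guid
            for guid, record in self.by_guid.items()
            if all(record.get(key) == value for key, value in criteria.items())
        ]

    def fetch(self, guid):
        """Return the full record for a GUID."""
        return self.by_guid[guid]
```

Splitting query and fetch this way means a client can ask for matching GUIDs first and then fetch only the records it does not already have cached.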

Example

Let's do a two-minute intro to creating and posting a tidbit for a fictional TV application. The following should be the same in both C and Python (although C requires types).

Step 1: Get a key

key = tidbit_key_get ("mytv", "MyTV v1.2");

Here we supply the name of our application twice. The first should never change, so that we pick up the same key each time, and the second is used for the user agent.

Step 2: Get a database

database = tidbit_database_default_new ();

This gets the default database on the system.

Step 3: Create the record

record = tidbit_record_new ("television/episode");

This creates a new record we can put data into. The table name is compulsory so we supply it here.

Step 4: Add the data

tidbit_record_add_element (record, "series_name", "Ugly Betty", TIDBIT_RECORD_ELEMENT_TYPE_KEY);

tidbit_record_add_element (record, "episode_name", "The Butterfly Effect (Part 1)", TIDBIT_RECORD_ELEMENT_TYPE_KEY);

tidbit_record_add_element (record, "rating", "0.6", TIDBIT_RECORD_ELEMENT_TYPE_VALUE);

Note the difference between the key and value entries (these correspond to the ‘==’ and ‘:=’ separators described earlier). We may change our rating later, so it is a value; a later record will then overwrite any records which match on the keys.
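To see how the element types relate to the record text, here is a small Python sketch that turns the three elements above into record lines. The constants and the helper are made up for illustration and are not part of libtidbit; escaping of special characters is ignored.

```python
KEY = "=="    # the element identifies the record and is used for matching
VALUE = ":="  # the element is plain data that a newer record may overwrite


def format_element(name, value, kind):
    """Render one element as a line of a tidbit record."""
    return name + kind + value


elements = [
    ("series_name", "Ugly Betty", KEY),
    ("episode_name", "The Butterfly Effect (Part 1)", KEY),
    ("rating", "0.6", VALUE),
]
lines = [format_element(*element) for element in elements]
print("\n".join(lines))
# series_name==Ugly Betty
# episode_name==The Butterfly Effect (Part 1)
# rating:=0.6
```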

Step 5: Sign the record

tidbit_record_sign (record, key);

Once a record is signed, it cannot be altered.

Step 6: Insert it into the database

tidbit_database_insert (database, record);

Step 7: Tidy up

tidbit_record_unref (record);

Now that we are finished with this record, we free it. By now, the record is happily on its way around the world.

Development

If you have interests in the semantic web/distributed hashtables, you have an idea for an awesome application, you found a fundamental error or you just want to have a bit of a play, then the source is available.